Mechatronics Engineering, University of Waterloo

PROJECTS

This page showcases some of the more notable projects I have completed in school and in industry.

Automatic Apple Picker

For my fourth-year design project, I was part of a team that developed an automatic apple picker. I was responsible for the electrical design and for developing the AI. I also assisted with the vision system, sensor integration, and motor selection.

For the AI, I developed a CNN that could classify an apple's species with 86% accuracy. Knowing the species tells us the apple's average size, and from that we can estimate how far the apple is from the camera based on the number of pixels it occupies. The code for this is available on my GitHub. The video to the right demonstrates the final arm we ended up with.
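The size-to-distance step can be sketched with the pinhole camera model, where an object of real width W at distance Z appears f·W/Z pixels wide. This is a minimal illustration, not the project's code: the species names and average widths in `AVERAGE_WIDTH_M` are hypothetical placeholders, and the focal length in pixels is assumed to come from a prior camera calibration.

```python
# Hypothetical average apple widths in metres; the project's real values are not given.
AVERAGE_WIDTH_M = {
    "gala": 0.075,
    "granny_smith": 0.080,
    "honeycrisp": 0.085,
}


def estimate_distance_m(species: str, pixel_width: float, focal_length_px: float) -> float:
    """Estimate camera-to-apple distance using the pinhole camera model.

    An object of real width W at distance Z appears w = f * W / Z pixels wide,
    so Z = f * W / w. focal_length_px is assumed known from camera calibration.
    """
    if pixel_width <= 0:
        raise ValueError("pixel_width must be positive")
    real_width = AVERAGE_WIDTH_M[species]
    return focal_length_px * real_width / pixel_width


if __name__ == "__main__":
    # A Gala apple spanning 150 px with an assumed 600 px focal length.
    print(estimate_distance_m("gala", 150, 600))
```

This sketch uses the apple's pixel width; if the pixel count (area) is used instead, distance scales with the inverse square root of that count.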

Yelp Humour Analyzer

As part of my multi-sensor data fusion class, I developed a set of neural networks to predict how likely a restaurant review is to be voted "funny" on Yelp. These networks used the publicly available Yelp dataset to learn to distinguish between funny and non-funny reviews.

I tested both CNN and LSTM networks to see which would perform better for this task, giving the networks a vocabulary of the 10,000 most common words. The final LSTM network reached a test accuracy of 65%, and the CNN reached 72%. The code for these is available on my GitHub.
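Restricting the networks to a fixed vocabulary usually means mapping the most frequent words to integer ids and padding each review to a fixed length before it reaches a CNN or LSTM. The sketch below shows one way to do that with the standard library; the tokenizer, the reserved ids (0 for padding, 1 for unknown words), and `max_len` are assumptions for illustration, not the settings used in the project.

```python
import re
from collections import Counter


def tokenize(text):
    # Lowercase and keep runs of letters and apostrophes as words (a simplifying assumption).
    return re.findall(r"[a-z']+", text.lower())


def build_vocab(texts, max_words=10_000):
    """Map the max_words most frequent tokens to ids 2..max_words+1.

    Id 0 is reserved for padding and id 1 for out-of-vocabulary words.
    Ties in frequency keep the order in which words were first seen.
    """
    counts = Counter(tok for text in texts for tok in tokenize(text))
    return {word: i + 2 for i, (word, _) in enumerate(counts.most_common(max_words))}


def encode(text, vocab, max_len=100):
    """Convert a review to a fixed-length list of ids, truncating or zero-padding."""
    ids = [vocab.get(tok, 1) for tok in tokenize(text)][:max_len]
    return ids + [0] * (max_len - len(ids))


if __name__ == "__main__":
    vocab = build_vocab(["the food was great", "the service was slow"], max_words=3)
    print(vocab)
    print(encode("the pizza was great", vocab, max_len=6))
```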

Turtlebot

As part of my fourth-year autonomous robotics class, I programmed a Clearpath TurtleBot to perform a variety of tasks, including path finding and area mapping.

While working on this robot, I gained a lot of experience with C++ and ROS, which were used to program it. I also gained experience with LIDAR sensors and with state-estimation algorithms used for navigation, such as Kalman filters and extended Kalman filters.
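To make the Kalman filter mention concrete, here is a minimal scalar (1-D) sketch of the predict/update cycle, assuming a linear constant-position model with an optional displacement input. It is illustrative only and not the filter that ran on the TurtleBot; an extended Kalman filter runs the same cycle after linearizing a nonlinear motion or measurement model.

```python
from dataclasses import dataclass


@dataclass
class Kalman1D:
    x: float  # current state estimate (e.g. position along one axis)
    p: float  # variance of the estimate
    q: float  # process noise variance added on each prediction
    r: float  # measurement noise variance

    def predict(self, u: float = 0.0) -> None:
        # Constant-position model: move by the commanded displacement u,
        # and grow the uncertainty by the process noise.
        self.x += u
        self.p += self.q

    def update(self, z: float) -> float:
        # Kalman gain weighs the measurement against the prediction.
        k = self.p / (self.p + self.r)
        self.x += k * (z - self.x)
        self.p *= 1 - k
        return self.x


if __name__ == "__main__":
    kf = Kalman1D(x=0.0, p=1.0, q=0.0, r=1.0)
    kf.predict()
    print(kf.update(2.0), kf.p)
```

With equal prior and measurement variance, the first update lands halfway between the prior estimate and the measurement, and the variance halves.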

Production Monitoring System

While at Dot Automation, I designed and built a system for monitoring the performance of industrial machines. The product was entirely my own idea.

I handled every part of the project, including designing and building the circuits, programming the microcontroller, setting up the data processing, and building a live monitor.

The system provides live updates on machine performance and indicates whether each machine is meeting its required performance. It also saves the company money by reducing downtime: as soon as a machine goes down, the system emails the engineer responsible for it.

The system also generates daily reports on machine performance and issues and emails them to the engineer in charge of each machine. All of this is achieved at a cost far below that of competing products.

For more information, see “Production Tracking” on Dot Automation’s service page.

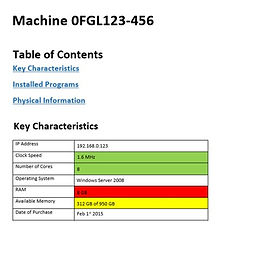
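The downtime alerting and daily reporting described above can be sketched as a small state machine: a machine counts as down when no production cycle has been seen for a timeout, and an alert fires only on the transition from running to down. This is a hypothetical illustration, not the product's code; the `notify` hook (which could, for example, wrap an email sent with Python's `smtplib`), the timeout, and the summary fields are all assumptions.

```python
from dataclasses import dataclass, field
from typing import Callable, List, Optional


@dataclass
class MachineMonitor:
    """Flags a machine as down when no cycle has been recorded for timeout_s seconds."""
    name: str
    timeout_s: float
    notify: Callable[[str], None]  # hypothetical alert hook, e.g. an email sender
    last_cycle: Optional[float] = None
    down: bool = False
    cycle_times: List[float] = field(default_factory=list)

    def record_cycle(self, t: float) -> None:
        # Called whenever the sensor reports a completed machine cycle at time t.
        self.last_cycle = t
        self.cycle_times.append(t)
        if self.down:
            self.down = False
            self.notify(f"{self.name} is running again")

    def check(self, now: float) -> bool:
        # Alert only on the running -> down transition, not on every check.
        stalled = self.last_cycle is not None and now - self.last_cycle > self.timeout_s
        if stalled and not self.down:
            self.down = True
            self.notify(f"{self.name} has been idle for over {self.timeout_s:.0f} s")
        return self.down

    def daily_summary(self) -> dict:
        # Mean time between consecutive cycles, or None with fewer than two cycles.
        gaps = [b - a for a, b in zip(self.cycle_times, self.cycle_times[1:])]
        mean_gap = sum(gaps) / len(gaps) if gaps else None
        return {"machine": self.name, "cycles": len(self.cycle_times), "mean_cycle_s": mean_gap}


if __name__ == "__main__":
    monitor = MachineMonitor("press-1", timeout_s=60, notify=print)
    monitor.record_cycle(0)
    monitor.record_cycle(30)
    monitor.check(100)
    print(monitor.daily_summary())
```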

Monitoring Script

During my co-op at Avaya in Ireland, I developed a script for evaluating server performance files.

Because these files were plain text and often thousands of lines long, they were painful to look through.

My script converted these files into a much easier-to-navigate HTML page. It evaluated different performance characteristics of the systems and highlighted the results for easy viewing, and hyperlinks to each section of the page further eased navigation.

Thanks to my script, search time was cut by as much as 75%. I have created a simple example of the results for this portfolio, which is shown alongside. The actual code and results are unavailable for proprietary reasons.
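Since the real files and script are proprietary, the following is only a hypothetical sketch of the general approach: parse a plain-text report, build a linked table of contents, and highlight values that exceed a limit. The input format (`[Section]` headers followed by `key: value` lines) and the `limits` dictionary are assumptions, not the format of the actual server performance files.

```python
import html


def report_to_html(text: str, limits: dict) -> str:
    """Convert 'key: value' lines grouped under '[Section]' headers into an HTML page.

    Numeric values greater than limits[key] are highlighted; the format is assumed.
    """
    sections = []  # list of (title, [(key, value), ...])
    for raw in text.splitlines():
        line = raw.strip()
        if line.startswith("[") and line.endswith("]"):
            sections.append((line[1:-1], []))
        elif ":" in line and sections:
            key, value = (part.strip() for part in line.split(":", 1))
            sections[-1][1].append((key, value))

    # Table of contents with hyperlinks to each section.
    out = ["<html><body>", "<ul>"]
    for i, (title, _) in enumerate(sections):
        out.append(f'<li><a href="#s{i}">{html.escape(title)}</a></li>')
    out.append("</ul>")

    # One table per section, highlighting out-of-limit rows.
    for i, (title, rows) in enumerate(sections):
        out.append(f'<h2 id="s{i}">{html.escape(title)}</h2><table>')
        for key, value in rows:
            style = ""
            try:
                if float(value) > limits.get(key, float("inf")):
                    style = ' style="background:#fbb"'
            except ValueError:
                pass  # non-numeric values are shown without highlighting
            out.append(f"<tr{style}><td>{html.escape(key)}</td><td>{html.escape(value)}</td></tr>")
        out.append("</table>")
    out.append("</body></html>")
    return "\n".join(out)


if __name__ == "__main__":
    sample = "[CPU]\nload: 95\nidle: 5\n[Disk]\nusage: 40\n"
    print(report_to_html(sample, {"load": 90}))
```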

Autonomous Submarine

During my third year, I was the chief electrical designer for an autonomous underwater vehicle.

I implemented a distance detection system using lasers and a photodiode, as well as a manual control system using a PS4 controller. I also assisted with an OpenCV machine vision system for obstacle detection and with depth detection using a barometric pressure sensor. Lastly, I used an IMU to detect orientation and heading. All of this was done in Python on a Raspberry Pi using I2C communication.
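Depth detection from a pressure sensor rests on the hydrostatic relation P = P_surface + ρgh, so depth is h = (P − P_surface)/(ρg). The sketch below assumes fresh water at roughly 25 °C and a surface reference reading taken before diving; reading the sensor over I2C is driver-specific and is deliberately not shown.

```python
RHO_FRESH_WATER = 997.0  # kg/m^3, assumes a freshwater pool at about 25 °C
G = 9.80665              # m/s^2, standard gravity


def depth_m(pressure_pa: float, surface_pressure_pa: float, rho: float = RHO_FRESH_WATER) -> float:
    """Hydrostatic depth: P = P_surface + rho * g * h, so h = (P - P_surface) / (rho * g)."""
    return (pressure_pa - surface_pressure_pa) / (rho * G)


if __name__ == "__main__":
    surface = 101_325.0  # assumed reading taken at the surface before diving
    print(depth_m(surface + 9_777.0, surface))  # roughly 1 m
```

For seawater, passing a larger density (around 1025 kg/m^3) would be needed.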

By the end of the term, the vehicle was able to sense distance, depth, and orientation. It also had a basic machine vision system to allow autonomous navigation of an underwater obstacle course.

Fuse Testing Circuit

While the Formula Electric student team was designing its 2018 race car, I designed and built a circuit for testing the car's fuses.

The circuit tests fuses at currents ranging from 200 A to 2300 A to check whether and when they fail. These safety tests let us determine whether a fuse will fail before it is placed in the car, where a failure could have serious consequences.

It uses an Arduino microcontroller to provide the input voltage to the circuit's transistor and to record the data for later evaluation. A picture of the circuit, without any fuses or batteries attached, is shown on the right.
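One way the recorded data could be evaluated later is to find how long a fuse carried the test current before opening. This is a hypothetical sketch, not the team's analysis code: it assumes the log is a sequence of `(time_s, current_a)` samples, treats the test as started once current reaches half the target, and treats the fuse as open once current falls below `drop_fraction` of the target. Both thresholds are illustrative assumptions.

```python
from typing import Optional, Sequence, Tuple


def time_to_blow(samples: Sequence[Tuple[float, float]], test_current_a: float,
                 drop_fraction: float = 0.1) -> Optional[float]:
    """Return seconds from test start until the fuse opened, or None if it survived.

    The test is assumed to start at the first sample reaching half the target
    current; the fuse is assumed open once current drops below
    drop_fraction * test_current_a.
    """
    started_at = None
    for t, current in samples:
        if started_at is None:
            if current >= 0.5 * test_current_a:
                started_at = t  # test current established
        elif current < drop_fraction * test_current_a:
            return t - started_at  # current collapsed: fuse opened
    return None  # fuse never opened during the recorded test


if __name__ == "__main__":
    log = [(0.0, 0.0), (0.1, 1950.0), (0.2, 2000.0), (0.35, 5.0)]
    print(time_to_blow(log, test_current_a=2000.0))
```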

Data Acquisition Unit

I joined the Formula Electric race car student team in 2017. Every year, this team designs and builds a full-sized electric race car from scratch. For the 2017 car, I assisted with the design of the data acquisition unit (DAU). I built and tested all of the board's circuitry and assisted with the programming.

This unit recorded many key performance characteristics of the race car, such as position, velocity, acceleration, temperature, and brake pressure. Collecting this live performance data lets the team see which parts of the car are working as expected and which are not. The main schematic is shown on the left.
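A data acquisition unit typically logs fixed-size binary records so that many samples fit in limited storage and can be decoded quickly afterward. The following is a hypothetical record layout for illustration only: the field choice (a subset of the quantities listed above, with position omitted for brevity), the units, and the byte format are assumptions, not the DAU's actual format.

```python
import struct
from typing import List, NamedTuple


class Sample(NamedTuple):
    timestamp_ms: int
    velocity_mps: float
    accel_mps2: float
    temperature_c: float
    brake_pressure_kpa: float


# Little-endian with no padding: one uint32 timestamp plus four 32-bit floats = 20 bytes.
RECORD = struct.Struct("<I4f")


def pack_sample(s: Sample) -> bytes:
    """Serialize one sample into a fixed-size binary record."""
    return RECORD.pack(*s)


def unpack_samples(blob: bytes) -> List[Sample]:
    """Decode a log made of back-to-back records (length must be a multiple of RECORD.size)."""
    return [Sample(*fields) for fields in RECORD.iter_unpack(blob)]


if __name__ == "__main__":
    log = pack_sample(Sample(1000, 12.5, -3.25, 45.0, 800.0))
    print(RECORD.size, unpack_samples(log))
```

Values stored as 32-bit floats lose some precision on the round trip; the example values are exactly representable.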

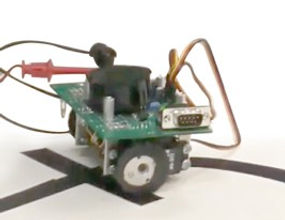

Line Following Robot

In my second year at Waterloo, I designed a line following robot as part of my MTE220 course.

As part of this project, I implemented a magnetic sensor, optical sensors, and filters from first principles. Over the course of the term, I gained experience with building Hall effect (magnetic) sensors and optical sensors, driving motors using PWM, and filtering.
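As an example of designing a filter from first principles, a first-order RC low-pass filter has its −3 dB cutoff at f_c = 1/(2πRC), which can be rearranged to choose a capacitor for a target cutoff. This sketch assumes a simple first-order RC design; the component values below are illustrative, not the values used on the robot.

```python
import math


def rc_cutoff_hz(r_ohm: float, c_farad: float) -> float:
    """-3 dB cutoff frequency of a first-order RC low-pass filter: f_c = 1 / (2*pi*R*C)."""
    return 1.0 / (2 * math.pi * r_ohm * c_farad)


def capacitor_for_cutoff(r_ohm: float, f_c_hz: float) -> float:
    """Rearranged design equation: C = 1 / (2*pi*R*f_c)."""
    return 1.0 / (2 * math.pi * r_ohm * f_c_hz)


if __name__ == "__main__":
    # 10 kOhm with 100 nF gives a cutoff of roughly 159 Hz.
    print(rc_cutoff_hz(10e3, 100e-9))
    # Capacitor needed for a 50 Hz cutoff with a 10 kOhm resistor (about 318 nF).
    print(capacitor_for_cutoff(10e3, 50))
```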

After soldering all of the circuits, I programmed the robot to follow dark lines, stop when it detected a magnetic field, and indicate the direction of that field using an on-board LED. The code for this robot is available on my GitHub.
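The behaviour described above can be sketched as a single control step that maps sensor readings to PWM duty cycles. This is a hypothetical illustration in Python rather than the robot's actual firmware: it assumes two optical sensors straddling the line, a signed Hall-sensor reading whose sign gives the field polarity, and made-up values for the threshold and base duty cycle.

```python
from typing import Tuple


def control_step(left_dark: bool, right_dark: bool, field_strength: float,
                 field_threshold: float = 0.5, base_duty: float = 0.6
                 ) -> Tuple[float, float, str]:
    """Return (left_duty, right_duty, led_state) for one control cycle.

    Assumes two optical sensors straddling the line and a signed Hall-sensor
    reading; which sign means "north" depends on sensor orientation.
    """
    if abs(field_strength) >= field_threshold:
        # Magnet detected: stop both motors and show the field direction on the LED.
        return 0.0, 0.0, "north" if field_strength > 0 else "south"
    if left_dark and not right_dark:
        return 0.0, base_duty, "off"  # line is under the left sensor: turn left
    if right_dark and not left_dark:
        return base_duty, 0.0, "off"  # line is under the right sensor: turn right
    return base_duty, base_duty, "off"  # otherwise drive straight ahead


if __name__ == "__main__":
    print(control_step(False, False, 0.0))
    print(control_step(True, False, 0.0))
    print(control_step(False, False, -0.9))
```

Turning by slowing one wheel keeps the logic simple; a proportional controller using analog sensor readings would give smoother tracking.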

The Impossible Game

While taking my course on real-time operating systems, I developed a video game for my final project. Using concepts such as multithreading and interrupts, along with a number of peripherals, I recreated a version of the popular online platformer The Impossible Game. In the game, a red square tries to move rightward as obstacles fly toward it.

A push button controlled the cube's jumping, while a potentiometer controlled how fast the environment moved. My program interpreted these inputs and used them to draw the player's performance on the LCD screen.
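The input handling described above can be sketched as a per-frame update that combines the jump button with a potentiometer reading. This Python sketch is a hypothetical illustration of the game logic only, not the original ARM Cortex code: the gravity and jump constants, the 12-bit ADC range, and the maximum scroll speed are all assumptions. On the real board, a button interrupt would typically set a flag that the game thread reads on its next frame.

```python
from dataclasses import dataclass

GRAVITY = -1.0       # hypothetical: change in vertical velocity per frame
JUMP_VELOCITY = 8.0  # hypothetical: initial upward speed when jumping, pixels per frame


@dataclass
class GameState:
    y: float = 0.0         # player height above the ground, in pixels
    vy: float = 0.0        # vertical velocity, pixels per frame
    scroll_x: float = 0.0  # how far the world has scrolled


def adc_to_speed(adc: int, adc_max: int = 4095, max_speed: float = 6.0) -> float:
    """Map a potentiometer reading (assumed 12-bit ADC) to a scroll speed."""
    return max_speed * adc / adc_max


def step(state: GameState, jump_pressed: bool, adc: int) -> GameState:
    """Advance the game by one frame."""
    on_ground = state.y <= 0.0
    # Jumping is only allowed from the ground; otherwise gravity applies.
    vy = JUMP_VELOCITY if (jump_pressed and on_ground) else state.vy + GRAVITY
    y = max(0.0, state.y + vy)
    if y == 0.0:
        vy = 0.0  # resting on (or landing on) the ground
    return GameState(y=y, vy=vy, scroll_x=state.scroll_x + adc_to_speed(adc))


if __name__ == "__main__":
    s = GameState()
    for pressed in (True, False, False):
        s = step(s, pressed, adc=2048)
        print(s)
```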

The main code files for this project are available on my GitHub at https://github.com/VincentMcLoughlin/ImpossibleGameArmCortexBoard. A sample of what the game looked like is shown alongside.